Quantum computing introduces a fundamental shift in how infrastructure, including data centers, is planned, designed, and constructed. However, opinion is split on exactly when quantum computing will move beyond experimental phases to become a practical reality for data center operations.

In a recent University of Phoenix survey of 1,000 U.S. IT professionals, respondents identified quantum computing as an important emerging technology for 2025.

Yet while nearly half of those surveyed said they believe quantum computing will disrupt IT infrastructure within a decade, many remain unsure about its exact impact.

With the data center industry facing unprecedented demands for computing power and energy efficiency, we canvassed some experts to hear their thoughts on the quantum computing timeline and its potential impact on the sector.

A Complex Problem

Quantum computing applies the principles of quantum mechanics to computation, manipulating quantum bits (qubits) to solve certain complex problems much faster than traditional computers can.
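To make the idea of a qubit concrete, here is a minimal sketch in Python that simulates a single qubit on an ordinary computer. It is an illustration only, not how any vendor's hardware or SDK works: the function names (`hadamard`, `probabilities`, `measure`) are hypothetical, and the toy model tracks just two amplitudes. The point is that a qubit in superposition yields 0 or 1 with a probability set by its amplitudes, rather than holding one fixed value.

```python
import math
import random

# A single qubit is described by two amplitudes, one per outcome (0 and 1).
# This toy model starts in the state that always measures as 0.
state = [1.0, 0.0]


def hadamard(state):
    """Apply a Hadamard gate, which turns a definite 0 into an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return [s * (a + b), s * (a - b)]


def probabilities(state):
    """Born rule: each outcome's probability is its squared amplitude magnitude."""
    return [abs(amp) ** 2 for amp in state]


def measure(state, rng):
    """Sample one measurement outcome (0 or 1) from the state's probabilities."""
    p0 = probabilities(state)[0]
    return 0 if rng.random() < p0 else 1


superposed = hadamard(state)
probs = probabilities(superposed)  # roughly 0.5 for each outcome

# Repeat the measurement many times; a seeded generator keeps the run repeatable.
rng = random.Random(42)
counts = [0, 0]
for _ in range(1000):
    counts[measure(superposed, rng)] += 1

print("probabilities:", probs)
print("counts over 1000 measurements:", counts)
```

Real quantum machines work with many entangled qubits, which is where classical simulation like this becomes impractical and where the potential speedup comes from.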

As compute demands soar and energy constraints tighten, governments and tech giants are partnering to advance quantum computing.

In July 2024, IBM and Japan’s National Institute of Advanced Industrial Science and Technology joined forces to develop next-generation quantum computers.

The implications for data center operations could be profound, and IBM is already using quantum computing technology in data centers in New York and Germany. The challenges, however, are equally weighty.

Todd Johnson, vice president of application engineering at the University of Phoenix, tells Data Center Knowledge that, unlike classical computing, quantum machines need highly specialized environments – think cryogenic cooling, electromagnetic shielding, and vibration isolation.

“These requirements will absolutely reshape how future data centers are designed, not just physically but also in how they operate and secure workloads,” he says.

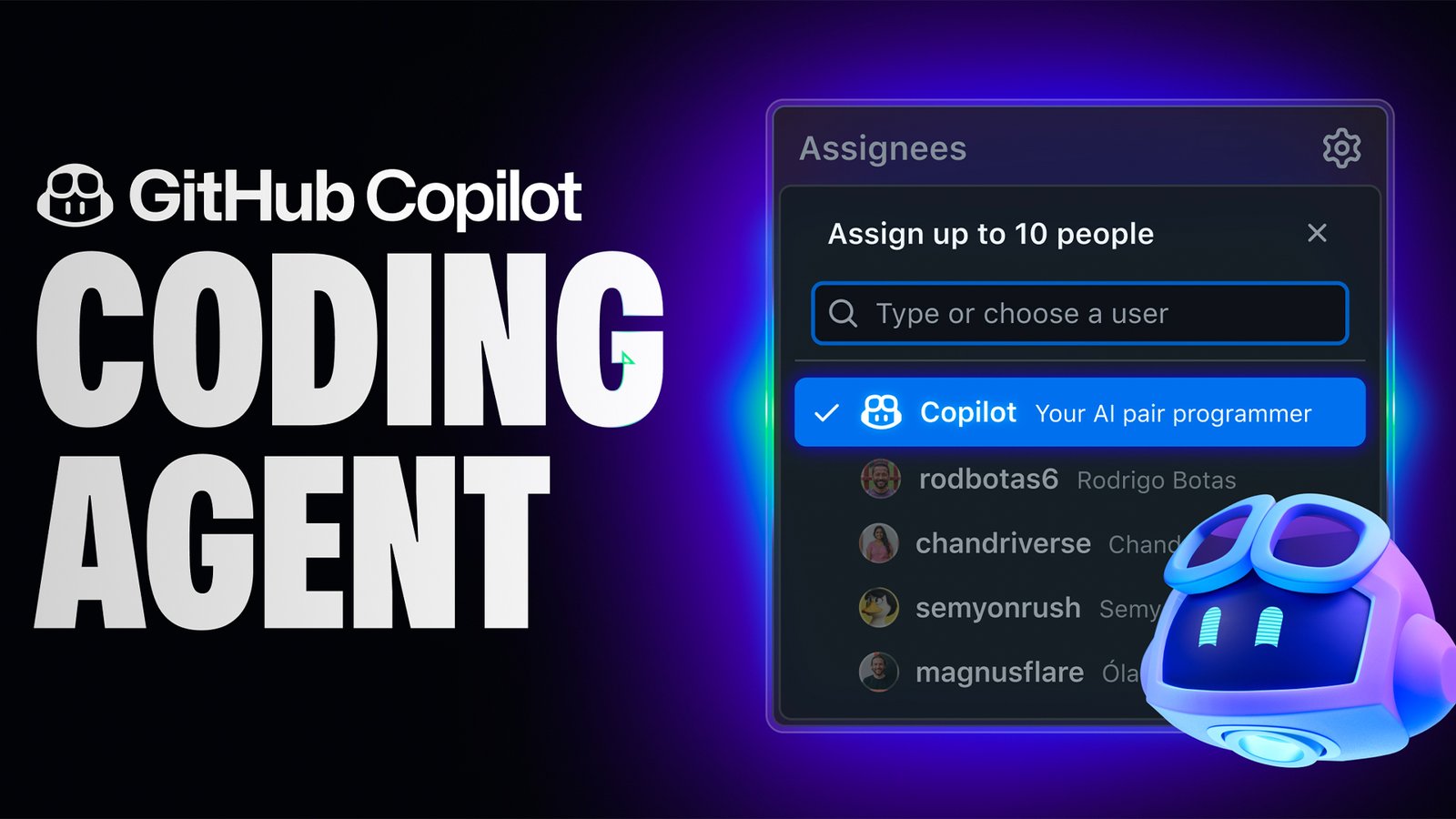

Johnson notes that companies, including AWS, are already integrating quantum computing into their ecosystems through services like Amazon Braket, while Microsoft Azure is offering flexible quantum resources via Azure Quantum.

These platforms are laying the groundwork for hybrid quantum-classical environments that minimize the need for on-prem quantum hardware, meaning future data centers might be less about physical space and more about cloud-native orchestration.

IBM’s Heron chip offers up to a 16-fold increase in performance and 25-fold increase in speed over previous IBM quantum computers. Image: IBM.

Infrastructure Intensive, Environmentally Fragile

Johnson outlines the way quantum computing “flips the script” regarding energy consumption and cooling needs in data center environments.

“While the actual computations are more efficient, the environment needed to keep quantum machines running, especially the cooling to near absolute zero, is extremely energy-intensive,” he says.

When companies move their infrastructure to the cloud and transition key systems such as customer relationship management (CRM), human capital management (HCM), and unified communications platforms to cloud-native versions, they can reduce the energy use associated with running large-scale physical servers 24/7.

“If and when quantum computing becomes commercially viable at scale, cloud partners will likely absorb the cooling and energy overhead,” Johnson says. “That’s a win for sustainability and focus.”

Alexander Hallowell, principal analyst at Omdia’s advanced computing division, says that unless one of the currently more “out there” technology options proves itself (e.g., photonics or something semiconductor-based), quantum computing is likely to remain infrastructure-intensive and environmentally fragile.

“Data centers will need to provide careful isolation from environmental interference and new support services such as cryogenic cooling,” he says.

He predicts the adoption of quantum computing within mainstream data center operations is at least five years out, possibly “quite a bit more.” He adds that it is impossible to predict how the technology’s adoption might influence the size, layout, and infrastructure priorities of next-generation data centers.

“It is certain quantum computing will be an adjunct to lots of classical computing power and GPUs rather than a replacement,” Hallowell says.

Conversations Between Quantum and Classical

As Johnson sees it, the big challenge is creating a hybrid model where quantum and classical systems can talk to each other efficiently.

“Today’s apps and platforms are built for binary logic; quantum introduces probabilistic logic – that’s a whole different ballgame,” he says.

The difficulty lies in integrating interfaces, middleware, and orchestration tools that can use quantum for its strengths, such as optimization problems and complex simulations, while everything else continues to run on classical systems.
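The split described above can be pictured as a simple routing rule. The sketch below is hypothetical throughout: the `Job` type, the `route` function, and the workload categories are invented for illustration and do not correspond to any real orchestration product or cloud API. It shows only the basic idea of sending quantum-suited work to a quantum backend and leaving the rest on classical systems.

```python
from dataclasses import dataclass

# Assumed workload categories. The article names optimization problems and
# complex simulations as quantum strengths; the labels themselves are made up.
QUANTUM_SUITED = {"optimization", "simulation"}


@dataclass
class Job:
    name: str
    kind: str


def route(job: Job, quantum_available: bool = True) -> str:
    """Return the backend label for a job: 'quantum' or 'classical'."""
    if quantum_available and job.kind in QUANTUM_SUITED:
        return "quantum"
    # Everything else, or everything when no quantum backend is reachable,
    # stays on classical infrastructure.
    return "classical"


jobs = [
    Job("route-planning", "optimization"),
    Job("crm-sync", "transactional"),
    Job("molecule-model", "simulation"),
]

plan = {job.name: route(job) for job in jobs}
print(plan)
```

A production orchestrator would also have to handle queueing, data movement between the two kinds of systems, and error rates, none of which this sketch attempts.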

“Moving to cloud-native apps may put companies in a good position as platforms may already be starting to build quantum into their roadmaps,” he says.

That would let those companies benefit from quantum innovation without having to rebuild everything themselves.

“It’s not about replacing classical computing – it’s about enhancing it where it makes sense,” Johnson explains.

Space, Time, Expertise – and Costs

The high cost of quantum computing will initially be a major obstacle for many small and mid-sized companies that run their own data centers.

Rob Clyde, former ISACA board chair and chairman of Crypto Quantique, says a quantum computer, including the refrigeration unit, can cost up to $100 million at scale.

He adds that there are currently few people who have specialized expertise in quantum computing operations and programming.

“As we will need a qualified workforce to run and write code for these quantum computers, this is a significant issue that will need to be solved through training and education measures,” Clyde says.

Quantum computers also lack high-level programming languages like those that exist for classical computers. Clyde notes that the industry is working to solve this, but says it will take time.

He explains that data centers must also have the space to accommodate the requirements of a quantum computer, which might take up a hundred or more square feet because of the refrigeration unit.

“Data centers will need to be designed with this in mind,” he says. “Of course, this could change if future quantum computers no longer needed this extreme cooling requirement.”

He adds that the layout must account for the fact that a quantum computer can’t be placed next to a classical computer system without appropriate shielding, because noise from the classical system interferes with the quantum machine and increases its error rate.

Then there is the matter of how the data centers network the quantum and classical computers together and set up the networking infrastructure within the data center.

“At first, this will likely be done in a customized way within each data center, but eventually off-the-shelf solutions and standards will be developed to make this much more manageable,” Clyde says.

Standards and protocols will emerge along the way, making it much easier for data centers and businesses to interconnect their systems.

“A lot of smart people are working on these issues furiously,” Clyde says. “We could see significant progress over the next couple of years.”